- What is Retrieval Augmented Generation in Artificial Intelligence?

- Key Differences Between LLM and RAG

- How Does RAG Work in AI - 3 Core Components

- RAG Applications in AI

- Open-Domain QA Systems

- Multi-Hop Reasoning

- Search Engines and Information Retrieval

- Legal Research and Compliance

- Comprehensive and Concise Content Creation

- Customer Support and Chatbots

- Educational Tools and Personalized Learning

- Context-Aware Language Translation

- Medical Diagnosis and Decision Support

- Financial Analysis and Market Intelligence

- Semantic Comprehension in Document Analysis

- Benefits of RAG in Artificial Intelligence

- Augmenting Memory Capabilities of LLMs

- Enhanced Accuracy and Relevance

- Time Efficiency

- Personalized User Experiences

- Incorporation of Domain-Specific Knowledge

- Efficient Knowledge Management

- Mitigation of Bias

- Enhanced Decision Support

- Boosting Source Credibility and Transparency

- Minimizing AI Errors and Inaccuracies

- Potential Challenges in RAG Implementation and Their Strategic Solutions

- Data Privacy and Security

- Quality and Reliability of Retrieved Data

- Scalability Issues

- Employee Resistance

- Future of Retrieval Augmented Generation in AI

- Enter into the Next Era of RAG AI with Appinventiv

- FAQs

The rapid evolution of artificial intelligence has transformed various aspects of our lives, from how we interact with technology and manage personal tasks to how businesses operate, make data-driven decisions, and deliver personalized customer experiences. Among the numerous AI advancements, the development of large language models (LLMs) stands out as a groundbreaking technique that generates human-like text and code with impressive accuracy.

However, these models often face challenges in integrating domain-specific knowledge and real-time data, which restricts their effectiveness and applicability across various industries.

This is where Retrieval-Augmented Generation (RAG) emerges as a favorable solution. RAG applications in artificial intelligence address the limitations of traditional models in incorporating domain-specific knowledge and real-time data. This innovative approach enhances the capability of AI systems to produce more accurate, relevant, and context-aware outputs, making them more effective and versatile across various applications.

What is Retrieval Augmented Generation in Artificial Intelligence?

RAG is an advanced technique in artificial intelligence that enhances the capabilities of LLMs. Its primary goal is to improve the accuracy, relevance, and quality of the responses generated by LLMs without the need to retrain the model.

Issues with LLM-generated output that RAG helps overcome:

- LLMs provide incorrect information when they lack the correct answer.

- They generate responses based on non-authoritative sources.

- These AI models deliver outdated or generic information when users expect specific, current responses.

- LLMs provide inaccurate responses due to terminology confusion, where different training sources use the same terms to refer to different concepts.

RAG in AI development can efficiently overcome these challenges by extending the capabilities of LLMs.

For instance, imagine developing a virtual assistant to help customers with troubleshooting their smart home devices. A standalone LLM, without specific training on the technical details and user manuals of these devices, would struggle to provide accurate solutions. It might either be unable to respond due to insufficient information or, even worse, offer incorrect troubleshooting steps.

RAG in AI overcomes this limitation by retrieving relevant information from the technical manuals and support documents in response to a user’s query. It then provides this information to the LLM as additional context, enabling it to generate a more accurate and detailed response.

Put simply, RAG allows the LLM to “consult” additional information to better formulate its answer.

Key Differences Between LLM and RAG

Though LLMs and RAG both rely on natural language processing (NLP) and text generation, they differ in several important ways. Here is a concise table highlighting the key differences between LLM and RAG models in AI development:

| Aspect | LLM | RAG |
|---|---|---|
| Core Functionality | Generates text based on pre-existing training data. | Generates text by integrating real-time retrieved information. |
| Data Dependency | Relies on the data it was trained on, which may become outdated. | Retrieves current and relevant data from external sources. |
| Application Scenarios | Suitable for general knowledge tasks where real-time data is not important. | Ideal for applications needing precise, context-aware, and up-to-date information. |
| Scalability | Requires periodic retraining with new data. | Continuous data retrieval reduces the need for frequent retraining. |
| Accuracy & Relevance | Limited to the accuracy of the training data. | Enhances accuracy and relevance by incorporating the latest data. |
| Versatility | Used for chatbots, content creation, translation, etc., but may fall short in providing the latest information. | Used for customer support, decision support, and personalized content, where up-to-date, relevant data is crucial. |

How Does RAG Work in AI – 3 Core Components

In its most basic form, RAG in AI works by combining three core components: retrieval, augmentation, and generation. Together, these components address the limitations of LLMs and offer significant benefits to businesses.

- Retrieval Mechanism: RAG gathers relevant information from a vast pool of data sources, including proprietary databases, documents, and the Internet.

- Augmentation Process: The retrieved information is added to the user’s query as context, supplying the background knowledge and details the model needs to produce a relevant answer.

- Generation Model: Given the augmented prompt, the LLM generates more accurate and contextually aware content.
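The three components above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `DOCUMENTS` list, the word-overlap scoring, and the `fake_llm` stand-in are all assumptions made for the example, and a real system would typically use a vector database and an actual model API.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a document store.
DOCUMENTS = [
    "To reset the smart thermostat, hold the power button for ten seconds.",
    "The smart bulb pairs over Bluetooth when it blinks three times.",
    "Warranty claims must be filed within twelve months of purchase.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Retrieval: rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def augment(query, passages):
    """Augmentation: place the retrieved passages in the prompt as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def generate(prompt, llm):
    """Generation: pass the augmented prompt to any callable that wraps an LLM."""
    return llm(prompt)


def fake_llm(prompt):
    """Stand-in for a real model call; it only reports the prompt size."""
    return f"(model response based on a {len(prompt)}-character prompt)"


if __name__ == "__main__":
    question = "How do I reset the thermostat?"
    passages = retrieve(question, DOCUMENTS)
    print(generate(augment(question, passages), fake_llm))
```

Keeping the three stages as separate functions means the toy retriever or the stand-in model can be swapped out without touching the rest of the pipeline.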

RAG Applications in AI

RAG in AI has demonstrated versatility across various domains, enhancing the accuracy, relevance, and contextuality of LLM-generated outputs. Here are some key applications of the RAG model in AI:

Open-Domain QA Systems

RAG enables AI to excel in open-domain question answering by retrieving relevant data from vast, diverse sources and integrating it into responses. This capability allows AI to answer complex, unstructured, and natural questions more accurately, providing detailed and contextually relevant information even for queries that span multiple domains.

Multi-Hop Reasoning

RAG in AI development empowers the model to perform multi-hop reasoning for tasks like complex QA and procedural text generation. This is especially crucial in scenarios where answers require synthesizing information from disparate sources to arrive at a logical conclusion, such as in legal case analysis or scientific research.
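One way to picture multi-hop retrieval is as a loop in which each retrieved passage widens the next search. The sketch below is only an illustration under simple assumptions: the `PASSAGES` data, the `STOPWORDS` set, and the keyword-overlap scoring are invented for the example, and real systems usually rely on embeddings or an LLM to plan each hop.

```python
import re

# Hypothetical knowledge base: the answer requires linking two separate facts.
PASSAGES = [
    "The Acme X1 thermostat uses the Zeta wireless module.",
    "The Zeta wireless module requires firmware 2.4 or later.",
    "The Acme doorbell ships with a rechargeable battery.",
]

# Small illustrative stopword list so common words do not drive the matching.
STOPWORDS = {"the", "a", "an", "or", "of", "does", "which", "what", "with", "need"}


def keywords(text):
    """Return the lowercase alphanumeric words of a text, minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def multi_hop_retrieve(question, passages, hops=2):
    """Retrieve a chain of passages, expanding the query after every hop."""
    query_terms = keywords(question)
    chain = []
    for _ in range(hops):
        remaining = [p for p in passages if p not in chain]
        if not remaining:
            break
        best = max(remaining, key=lambda p: len(query_terms & keywords(p)))
        if not query_terms & keywords(best):
            break  # nothing relevant is left to follow
        chain.append(best)
        query_terms |= keywords(best)  # terms from this hop guide the next one
    return chain


if __name__ == "__main__":
    for passage in multi_hop_retrieve(
        "Which firmware does the Acme X1 thermostat need?", PASSAGES
    ):
        print(passage)
```

In this example the question never mentions the Zeta module, so the firmware passage only becomes the best match once the first hop has added "zeta", "wireless", and "module" to the query terms.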

Search Engines and Information Retrieval

Retrieval Augmented Generation in AI significantly enhances search engines and information retrieval systems by integrating contextual information directly into search queries. This approach not only boosts the relevance and accuracy of search results but also fine-tunes them to better match user intent, thereby elevating the overall search experience.

Legal Research and Compliance

For legal professionals, RAG models streamline research processes by retrieving and analyzing pertinent case laws, statutes, and regulatory information. This capability supports informed decision-making and ensures compliance by providing accurate and up-to-date legal knowledge.

Comprehensive and Concise Content Creation

RAG models play a pivotal role in enhancing both content creation and summarization by seamlessly integrating relevant information from a wide array of sources, making it more accurate and comprehensive. Furthermore, RAG retrieves key points from extensive texts and synthesizes them into concise summaries, enhancing information accessibility and efficiency.

For instance, in the healthcare industry, medical researchers can use RAG models to automatically generate high-quality articles, reports, and other textual content or condense lengthy clinical trial reports into concise, easily digestible formats.

Customer Support and Chatbots

RAG in artificial intelligence retrieves and integrates specific information from knowledge bases or FAQs to deliver accurate and helpful responses to user inquiries. This ensures that conversational agents, like chatbots and virtual assistants, provide users with more informative, personalized, and engaging interactions.

For instance, Tootle partnered with Appinventiv to build an intelligent agent that answers a wide range of users’ general factual queries, helping them make informed decisions.

Educational Tools and Personalized Learning

RAG in AI-driven education systems facilitates personalized learning experiences by tailoring educational content to individual learner needs. By retrieving and integrating relevant educational resources, these tools enhance engagement and learning outcomes for students and learners across various subjects and levels.

Context-Aware Language Translation

RAG significantly enhances language translation by incorporating contextual information throughout the translation process. By retrieving relevant data and integrating it into translations, RAG ensures that translations are more accurate, contextually aware, and culturally appropriate. Whether in technical documentation, medical records, or legal texts, RAG’s context-aware translations ensure clarity and precision, making it particularly beneficial for technical or specialized fields where precise terminology and domain-specific knowledge are crucial.

Medical Diagnosis and Decision Support

In the healthcare domain, RAG models help retrieve and synthesize the latest medical literature, clinical guidelines, and patient data. They significantly aid medical professionals in precise diagnosis, personalized care, and accurate treatment recommendations, enhancing clinical decision-making, patient outcomes, and operational efficiency.

Financial Analysis and Market Intelligence

RAG AI enhances financial analysis by retrieving and analyzing current market trends, competitor data, news articles, and economic indicators. This capability enables more informed decision-making in investment strategies and business planning. Furthermore, access to comprehensive and up-to-date financial insights helps businesses optimize operational processes and drive growth.

Semantic Comprehension in Document Analysis

RAG applications in AI enhance the semantic understanding of documents by integrating external data sources that provide context. This allows AI to interpret and analyze documents with greater depth and accuracy, making it invaluable for industries that require precise document comprehension, such as legal, medical, and academic fields.

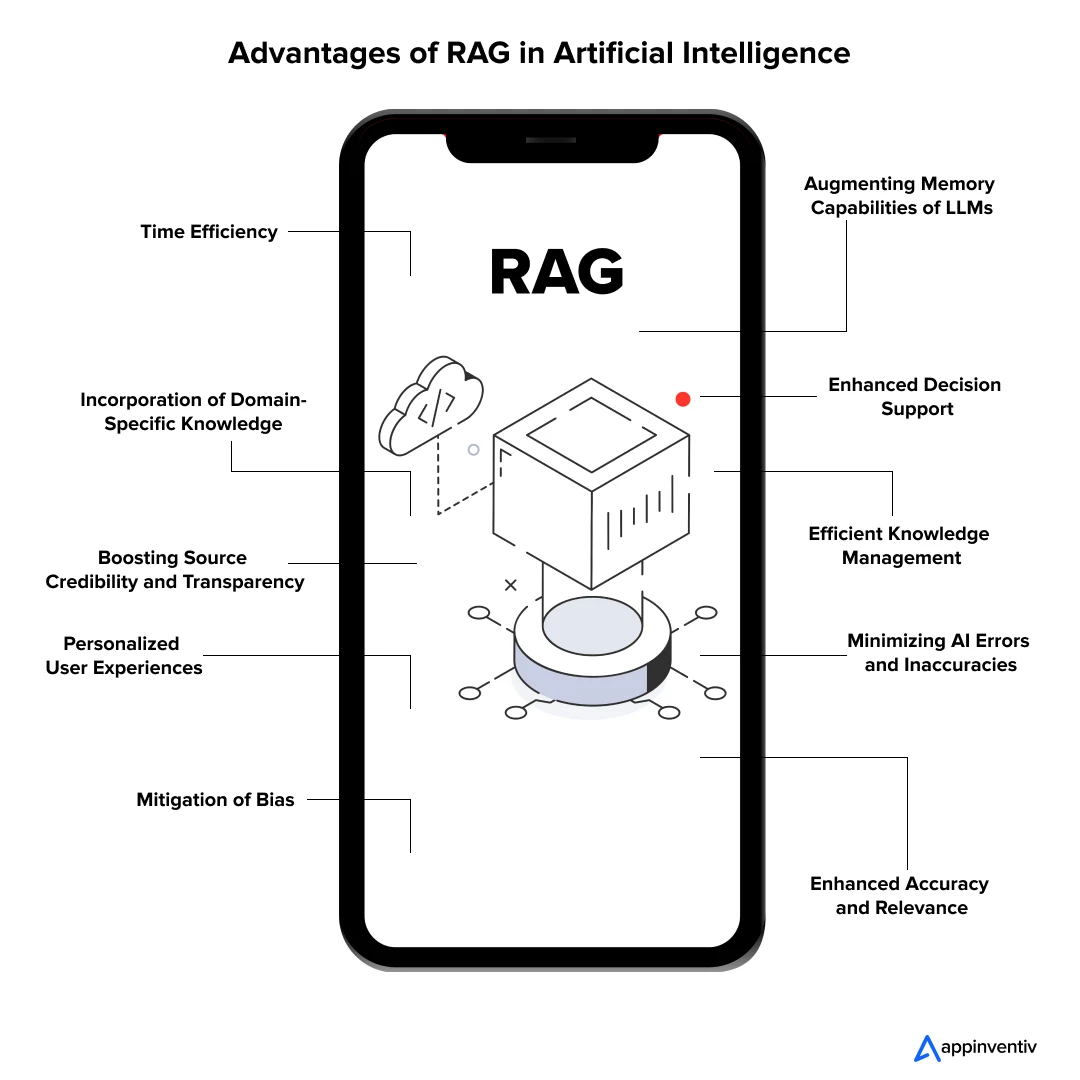

Benefits of RAG in Artificial Intelligence

RAG implementation in AI development offers numerous advantages that help enhance the performance, versatility, and applicability of AI systems, making them more effective in a wide array of applications. Here are some key benefits of RAG in AI development:

Augmenting Memory Capabilities of LLMs

RAG enhances the memory of large language models (LLMs) by allowing them to access and integrate relevant data from external sources. This results in improved retention of contextual details and historical information, enabling LLMs to generate more informed and comprehensive responses over extended interactions.

Enhanced Accuracy and Relevance

By integrating contextually relevant information from diverse sources, RAG significantly improves the accuracy of AI-generated responses. This ensures outputs are precise and directly relevant to specific queries or tasks at hand.

Time Efficiency

RAG optimizes the efficiency of AI systems by quickly retrieving and integrating relevant information from various sources. This reduces the time required for content creation, query response, and decision-making processes, allowing professionals to focus on more value-added activities.

Personalized User Experiences

By retrieving and utilizing user-specific information, RAG enables AI systems to deliver personalized interactions and recommendations, enhancing content engagement and user experience.

Incorporation of Domain-Specific Knowledge

Access to domain-specific or proprietary information makes RAG-equipped AI models highly effective in industry-specific applications. This is particularly valuable in healthcare, legal, and finance, where domain-specific knowledge is of utmost importance.

Efficient Knowledge Management

RAG supports efficient knowledge management by integrating information from various sources into centralized databases. This facilitates easy retrieval and utilization of knowledge, thereby enhancing decision-making and productivity within organizations.

Mitigation of Bias

One of the key benefits of RAG in AI development is its ability to mitigate biases. By retrieving diverse information from multiple sources, RAG provides a balanced perspective, promoting fair and responsible use of AI systems.

Enhanced Decision Support

In decision support systems, RAG plays a crucial role by synthesizing relevant data to inform decision-making processes. This is especially valuable in healthcare, finance, and legal services, where timely and accurate decisions are essential.

Boosting Source Credibility and Transparency

With RAG in AI development, the systems can provide clear references to the data used in generating responses. This improves transparency and trustworthiness, as users can verify the origins of the information and understand the basis for the AI’s output, facilitating greater confidence in AI-generated content.
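As a rough sketch of how such references might be carried through a RAG pipeline, the example below numbers each retrieved passage in the prompt and then lists the sources the answer actually cites. The `Passage` class, the function names, and the `[n]` citation convention are assumptions chosen for illustration, not a standard API.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical document identifier, such as a file name
    text: str


def build_cited_prompt(question, passages):
    """Number each passage so the model can cite it as [n]."""
    numbered = [f"[{i}] {p.text}" for i, p in enumerate(passages, start=1)]
    prompt = (
        "Answer the question using the numbered passages and cite them as [n].\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}"
    )
    references = {i: p.source for i, p in enumerate(passages, start=1)}
    return prompt, references


def append_references(answer, references):
    """Append a source list for every known [n] marker found in the answer."""
    cited = sorted(
        {int(n) for n in re.findall(r"\[(\d+)\]", answer) if int(n) in references}
    )
    if not cited:
        return answer
    lines = [f"[{n}] {references[n]}" for n in cited]
    return answer + "\n\nSources:\n" + "\n".join(lines)
```

Only markers that map to a retrieved passage are listed, so a citation number the model made up does not turn into a reference.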

Minimizing AI Errors and Inaccuracies

RAG helps reduce hallucinations—instances where AI generates inaccurate or fabricated information—by grounding responses in actual retrieved data. By relying on verifiable sources, RAG minimizes the likelihood of producing erroneous outputs, enhancing the reliability and correctness of AI systems.
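One simple way to act on this idea is to check whether any retrieved passage actually supports the query before calling the model, and to abstain otherwise. The sketch below assumes a word-overlap `support_score` and a `min_score` threshold of 0.5; both are illustrative choices, and a real system would more likely threshold on embedding similarity.

```python
import re


def words(text):
    """Return the set of lowercase alphanumeric words in a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(query, passage):
    """Fraction of query words that also appear in the passage (0.0 to 1.0)."""
    query_words = words(query)
    if not query_words:
        return 0.0
    return len(query_words & words(passage)) / len(query_words)


def grounded_prompt(query, passages, min_score=0.5):
    """Build a prompt from well-supported passages, or return None to abstain."""
    supported = [p for p in passages if support_score(query, p) >= min_score]
    if not supported:
        return None  # caller should answer "I don't know" instead of guessing
    context = "\n".join(supported)
    return (
        "Use only the context below. If it does not contain the answer, say so.\n"
        f"{context}\n\nQuestion: {query}"
    )
```

Returning `None` leaves the decision to the caller, which can then show a fallback message or route the question to a human.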

Potential Challenges in RAG Implementation and Their Strategic Solutions

When implementing RAG in Gen AI or AI development solutions, businesses may encounter several challenges that can impede their path to smooth AI adoption and digital transformation. Below, we explore some of the most common obstacles and their strategic solutions, ensuring the effective integration of the RAG model in AI development.

Data Privacy and Security

Challenge: Integrating external data sources through RAG can raise concerns about data privacy and security, particularly when handling sensitive or proprietary information.

Solution: Implement stringent security measures such as access controls and data encryption to ensure that sensitive information is protected. Additionally, adhere to data protection regulations and conduct regular security audits to address potential vulnerabilities.
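For instance, access controls can be applied before retrieval so that restricted documents never reach the prompt. The following is a minimal sketch under assumed names: the `Document` class and its `allowed_roles` field are hypothetical, and a real deployment would take roles from its identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    allowed_roles: frozenset  # roles permitted to see this document


def authorized_documents(documents, user_roles):
    """Keep only documents that share at least one role with the user."""
    roles = set(user_roles)
    return [doc for doc in documents if doc.allowed_roles & roles]


if __name__ == "__main__":
    docs = [
        Document("Public troubleshooting FAQ", frozenset({"customer", "agent"})),
        Document("Internal pricing sheet", frozenset({"finance"})),
    ]
    # Retrieval should only ever search what this user is allowed to read.
    for doc in authorized_documents(docs, {"customer"}):
        print(doc.text)
```

Filtering before retrieval, rather than after generation, means sensitive text is never placed in the model's context in the first place.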

Quality and Reliability of Retrieved Data

Challenge: The effectiveness of RAG depends on the quality and reliability of the retrieved information. Low-quality or unreliable data can lead to inaccurate or misleading AI outputs.

Solution: Use advanced data cleaning, normalization, and validation techniques to ensure that only high-quality and authoritative sources are used. Regularly update and maintain data sources to ensure they remain relevant and reliable.
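A basic version of this cleaning step could look like the sketch below, which keeps only records from trusted sources, drops stale entries, and removes duplicates after normalizing whitespace and case. The `Record` class, the `TRUSTED_SOURCES` allowlist, and the 365-day cutoff are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Record:
    source: str
    text: str
    updated: date


# Hypothetical allowlist of authoritative sources.
TRUSTED_SOURCES = {"product-manual", "support-kb"}


def clean_records(records, today, max_age_days=365):
    """Return trusted, recent, de-duplicated records in their original order."""
    seen = set()
    kept = []
    for record in records:
        normalized = " ".join(record.text.split()).lower()
        if record.source not in TRUSTED_SOURCES:
            continue  # unknown or non-authoritative source
        if today - record.updated > timedelta(days=max_age_days):
            continue  # too old to be considered reliable
        if not normalized or normalized in seen:
            continue  # empty or duplicate content
        seen.add(normalized)
        kept.append(record)
    return kept
```

Passing `today` in explicitly keeps the function deterministic, which makes the freshness rule easy to test.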

Scalability Issues

Challenge: As the volume of data and the complexity of queries increase, scaling RAG systems to handle large-scale operations can become challenging.

Solution: Design RAG systems with scalability in mind. For instance, utilize cloud-based solutions to handle large volumes of data and high query loads. Optimize algorithms and processes to improve efficiency and performance.
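As one example of such an optimization, an inverted index lets a query look up candidate documents by word instead of scanning the whole collection. This is a simplified sketch with assumed function names; large deployments generally use a search engine or an approximate nearest-neighbor vector index for the same purpose.

```python
import re
from collections import defaultdict


def build_index(documents):
    """Map each word to the ids of the documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in enumerate(documents):
        for word in set(re.findall(r"[a-z0-9]+", text.lower())):
            index[word].add(doc_id)
    return index


def candidates(query, index):
    """Return ids of documents that contain at least one query word."""
    ids = set()
    for word in re.findall(r"[a-z0-9]+", query.lower()):
        ids |= index.get(word, set())
    return ids


if __name__ == "__main__":
    docs = ["Reset the thermostat", "Pair the bulb", "Warranty terms"]
    index = build_index(docs)  # built once, reused for every query
    print(sorted(candidates("reset bulb", index)))
```

The index is built once up front, so each query touches only the entries for its own words and the more expensive ranking step runs on a much smaller candidate set.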

Employee Resistance

Challenge: Another major roadblock is employee resistance and skepticism regarding the benefits of RAG. Employees may also feel overwhelmed by the need to learn new skills and adapt to new systems.

Solution: Provide comprehensive training and support to help employees understand the benefits and use cases of RAG in AI development. By facilitating a culture of collaboration and support, organizations can mitigate resistance and ensure the successful adoption of RAG AI.

Future of Retrieval Augmented Generation in AI

RAG applications in AI are presently utilized to deliver real-time, accurate, and contextual responses in conversational applications such as chatbots, email, and text messaging. These initial implementations of RAG in AI models showcase the transformative potential of RAG in enhancing interaction quality, thereby improving the accuracy and relevance of AI-generated answers.

However, the future of RAG AI promises even greater advancements, expanding its role from merely responding to queries to performing complex and impactful tasks. In the coming years, retrieval augmented generation in AI development will be capable of initiating context-driven actions, significantly enhancing efficiency and user convenience.

For example, future RAG applications could manage the entire booking process for a vacation rental based on user preferences and specific events or recommend and assist with enrolling in educational programs aligned with an individual’s career path. This evolution will transform AI from a passive information provider to an active facilitator in decision-making processes.

Enter into the Next Era of RAG AI with Appinventiv

RAG applications in AI development offer practical solutions to complex challenges by combining retrieval and generation-based methods, enhancing the accuracy, relevance, and efficiency of AI systems. This innovative approach is poised to revolutionize various industries, including education, research, finance, healthcare, content creation, legal services, customer support, and more, by providing more precise and contextually relevant outputs.

Appinventiv, a trusted AI development company, leverages RAG to deliver cutting-edge AI solutions. Our experience and expertise in implementing advanced technologies such as Transformers and RAG in AI models ensures that your business stays ahead in today’s tech-driven age.

For instance, our esteemed clients, such as Mudra, Tootle, JobGet, Chat & More, HouseEazy, YouComm, and Vyrb, partnered with us to build next-gen RAG AI applications and achieved remarkable success in the digital landscape.

Want a real-life example of RAG in AI that illustrates our experience with this approach? Consider Gurushala, an AI-powered online learning platform that partnered with us to bring a positive change in the education system. Here is a pictorial presentation of the approach we followed in implementing RAG in Gurushala.

By partnering with us, you gain access to a pool of 1500+ tech professionals who will empower your business to harness the full potential of RAG in today’s AI era and drive unprecedented success.

Embrace the future of RAG AI with us and experience the transformative impact of this new frontier on your business. Contact us to learn how we can turn your visionary ideas into groundbreaking realities.

FAQs

Q. What is RAG in generative AI?

A. RAG applications in generative AI allow systems to access and leverage an organization’s proprietary information in conjunction with the vast troves of knowledge available on the Internet. RAG AI bridges the gap between retrieving relevant information and generating insightful, context-aware responses. By combining the strengths of retrieval-based models and generative models, RAG ensures that the content created is not only fluent but also contextually appropriate and factually grounded.

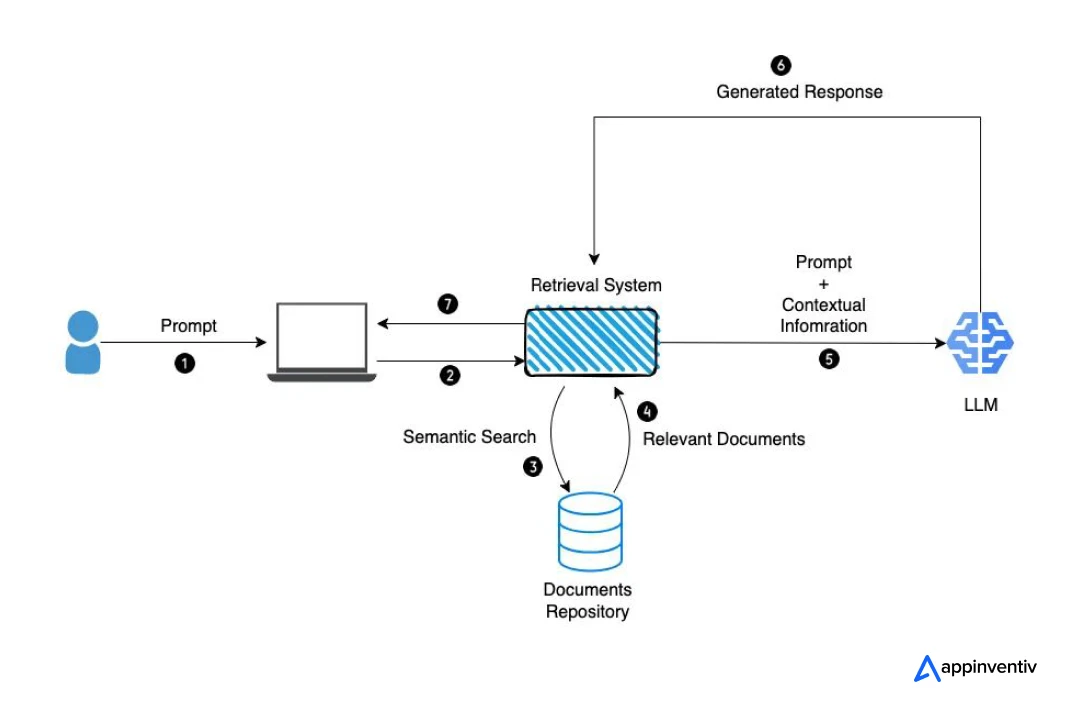

Q. How does RAG work in AI?

A. To understand the working mechanism of the RAG model in AI development, it is necessary to first know what RAG is in AI. RAG is a cutting-edge approach in AI that combines retrieval-based and generation-based methods to enhance the performance and accuracy of large language models.

Here is how it works:

Query Generation: The process starts with an input query that needs to be answered or a task that requires completion.

Information Retrieval: The system searches a large database and selects the information most relevant to the query.

Context Integration: Retrieved information is then integrated into the input context, providing the generation model with pertinent data to improve output quality and relevance.

Response Generation: Using the enriched context, the generation model (for example, the LLM behind ChatGPT) produces responses that are more accurate and contextually relevant.

Q. Can a RAG cite references for the data it retrieves?

A. Yes, a RAG model can cite references for the data it retrieves, providing sources and improving the credibility and transparency of its generated responses.

Q. How does the RAG mechanism work?

A. The RAG mechanism works by first retrieving relevant information from an external knowledge base or database in response to the user’s prompt. This retrieved data is then used to augment the LLM’s response, allowing it to produce more accurate and contextually relevant outputs by combining this information with its inherent generative capabilities.
